AI Autonomy versus Human Control

AI is becoming increasingly autonomous. Systems can make decisions independently, learn from data, and perform complex tasks. They are deployed in sectors such as healthcare, the judiciary, education, defense, and finance. This sounds efficient, but it raises fundamental questions about human control. How do we ensure that we, and not the technology, remain at the helm?

The European AI Act underscores this tension. Especially for high-risk AI, the law sets requirements for human oversight. But what exactly does human agency mean? What risks does AI autonomy bring? And how do we design systems in which humans maintain control?

This blog delves into the core of these questions, with clear examples and practical strategies. From pilots losing control to chatbots manipulating emotions: human agency is under pressure. Time to reclaim it.

1. What Is Human Agency and Why Does It Matter?

Humans must remain at the helm of AI systems

Human agency is our ability to consciously make choices and exert influence on our environment. Think of the difference between sitting behind the wheel yourself or being a passenger in a self-driving car. That autonomy, that sense of control, is essential for our dignity, responsibility, and well-being.

Technology has strengthened agency in many cases. The washing machine or vacuum cleaner gave people time and space back. AI now promises to do the same for intellectual work: medical analyses, legal assessments, or even journalistic productions. But there is a crucial difference. While classic technology responded to our input ("do what I say"), AI increasingly anticipates ("I suspect this is what you want"). This shifts the human role from director to spectator.

A striking example can be found in aviation. Pilots rely on autopilots and onboard computers. In the 2009 crash of Air France 447, the autopilot disconnected after the airspeed readings became unreliable, and the crew became confused. They no longer fully understood the situation, failed to correct it in time, and the plane crashed. This illustrates the "out-of-the-loop" problem: when people are no longer involved in the decision-making process, they lose overview, engagement, and influence.

2. How AI Threatens Our Agency

There are multiple mechanisms through which AI erodes our control. Some striking examples:

The Black Box

Many AI systems, such as deep learning models, are difficult to explain. A bank customer is told that their loan application has been rejected, but doesn't understand why. This lack of transparency makes it difficult to object to the decision or to improve the system. Agency requires comprehensibility. In the judiciary, this leads to discussions about the explainability of algorithmic decisions.

Manipulation and Behavioral Influence

Consider how TikTok or Instagram determine what you see, based on your previous interactions. This seems harmless, but algorithms can reinforce your preferences to the point where your worldview becomes distorted. Or worse: as in the Cambridge Analytica scandal, AI systems can be misused to influence elections by sending personalized political messages to susceptible voters.

Excessive Trust

In hospitals, doctors sometimes rely blindly on AI diagnoses. An error goes unnoticed because the system seems so reliable. This is called automation bias. If the AI says there's no tumor, further investigation is often skipped, with all the consequences that entails. In aviation, medicine, and law, this leads to errors due to human passivity.

Invisible Interference

Recommendation algorithms determine what we read, buy, or even think. You just wanted to buy a raincoat, but three hours later you're 200 euros poorer. Or you were convinced by a cleverly personalized video to vote for a certain party. This subtle influence limits your choices without you noticing. Information ecosystems thus become closed bubbles.

Social AI and Emotional Impact

People build emotional relationships with chatbots like Replika. That sounds harmless, but it can lead to loneliness, addiction, or emotional manipulation. When an AI behaves in a human-like way and that impression is exploited, the boundary between authentic and artificial relationships blurs. There have been cases where young people engaged in long-term interactions with AI friends, resulting in mental harm.

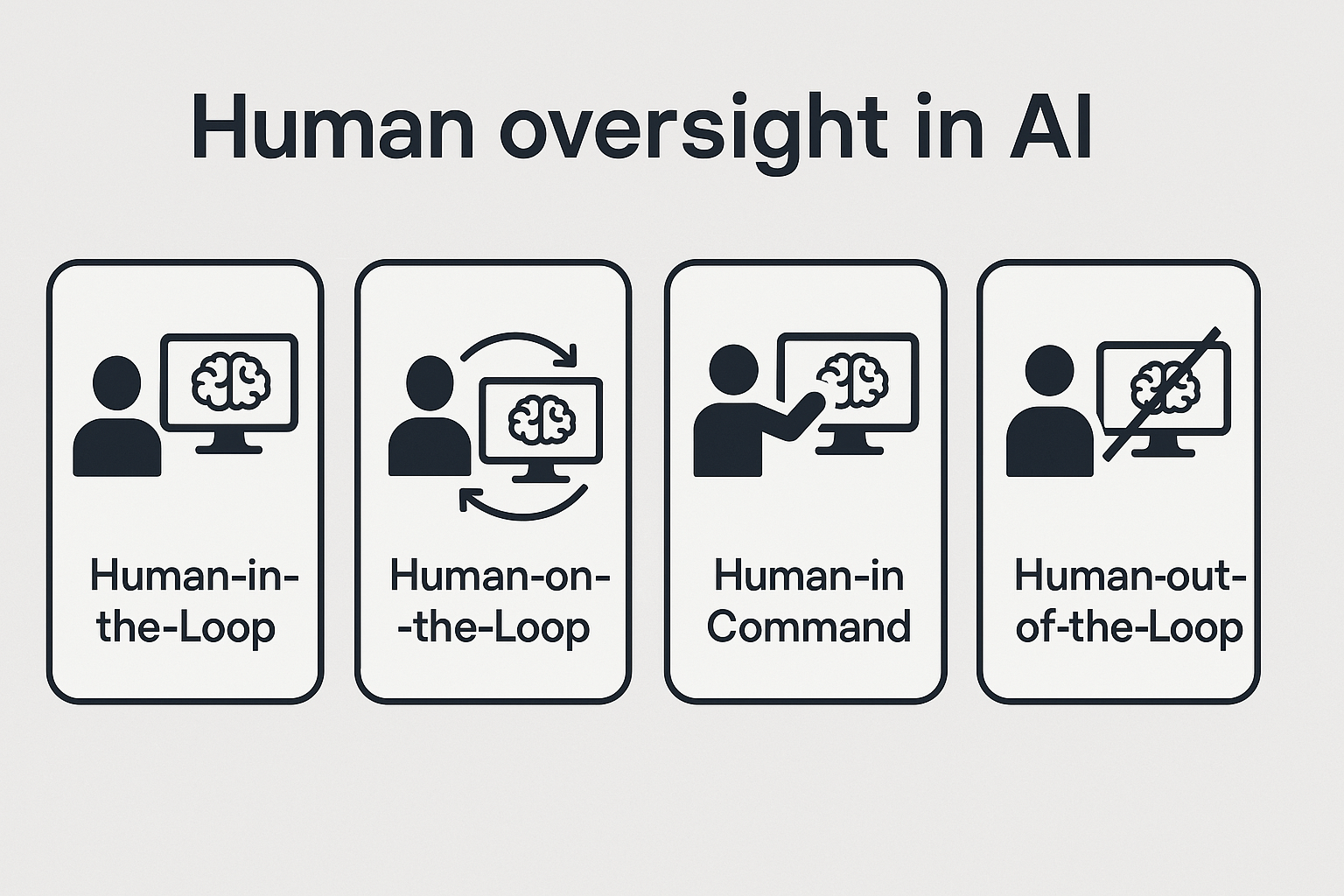

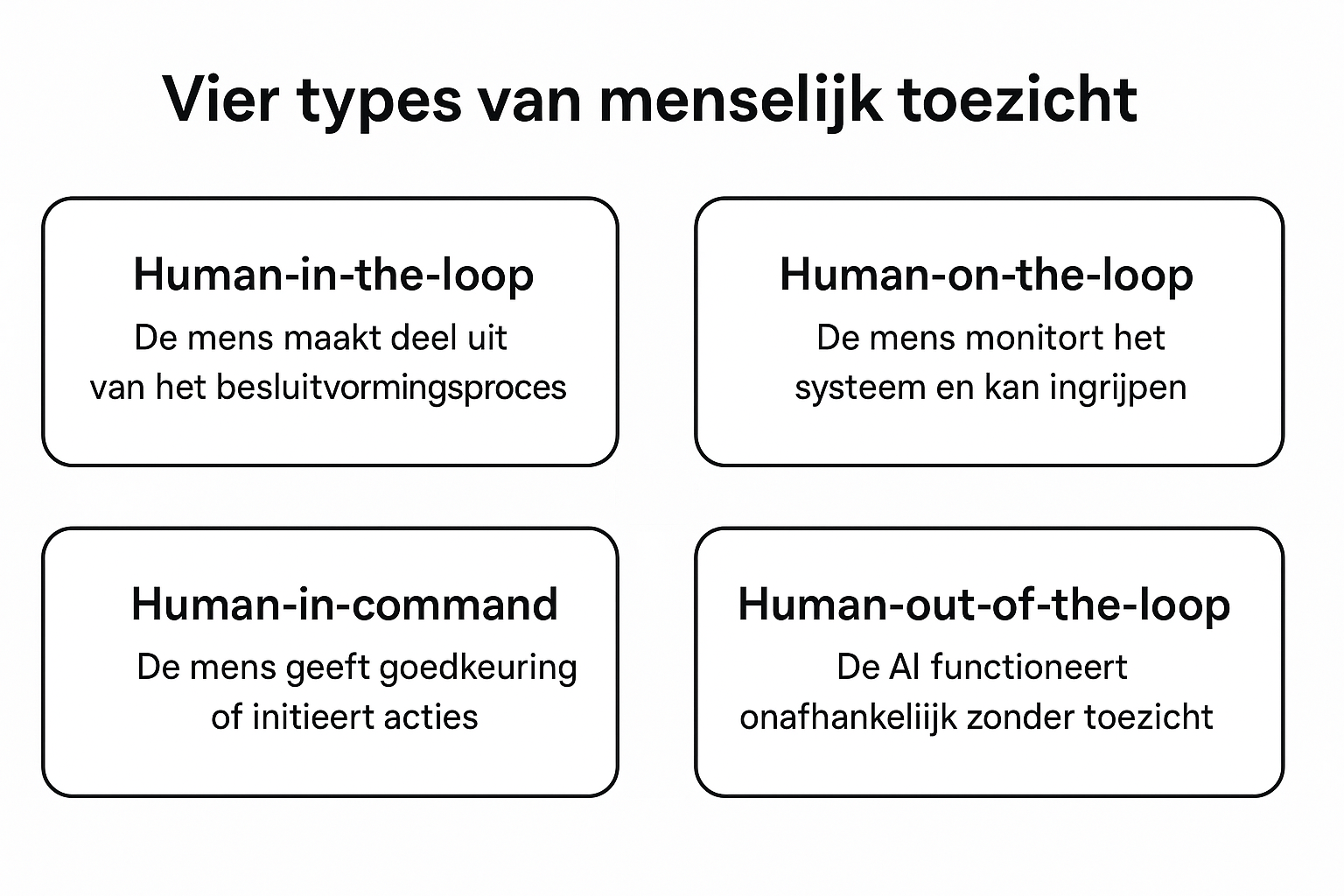

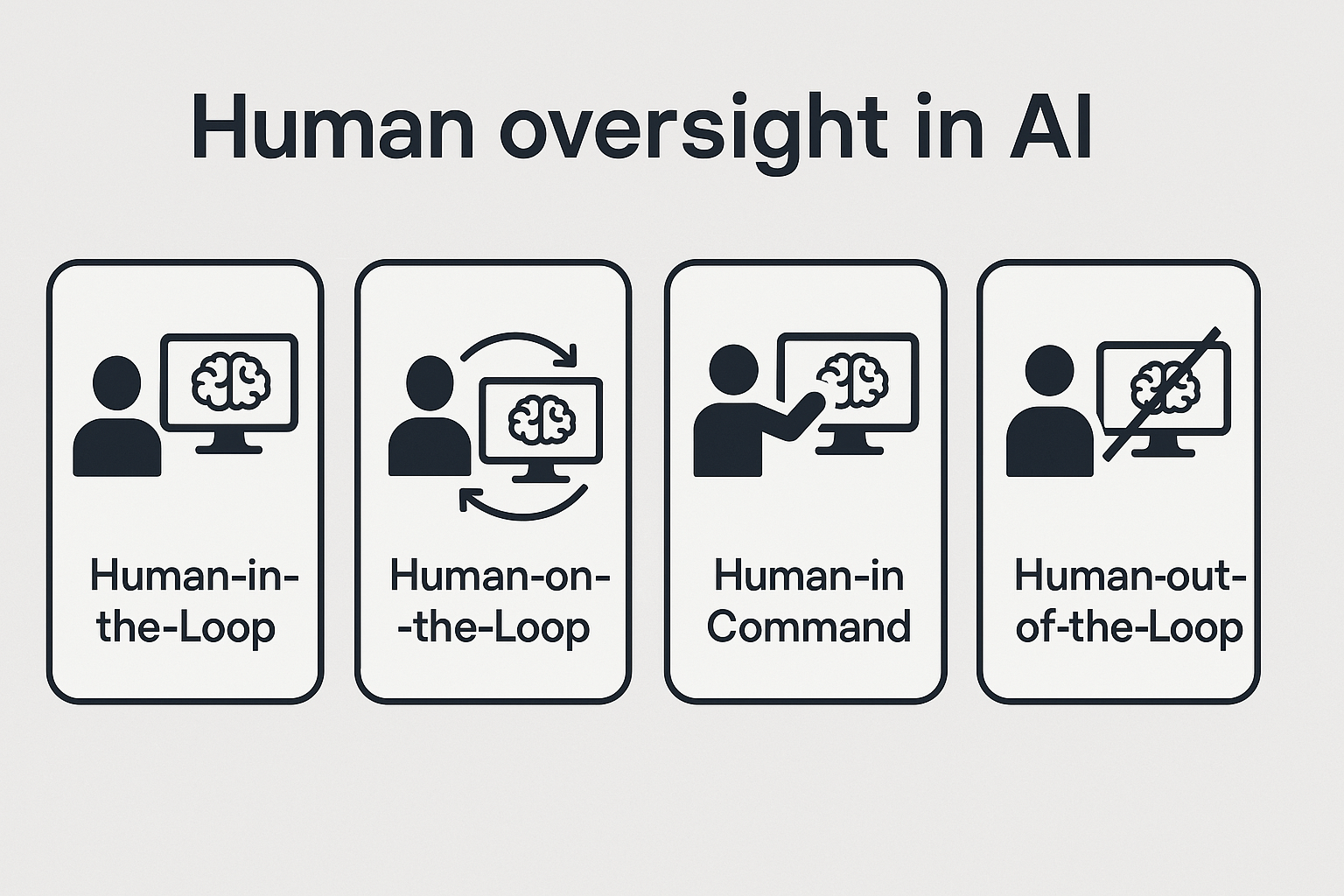

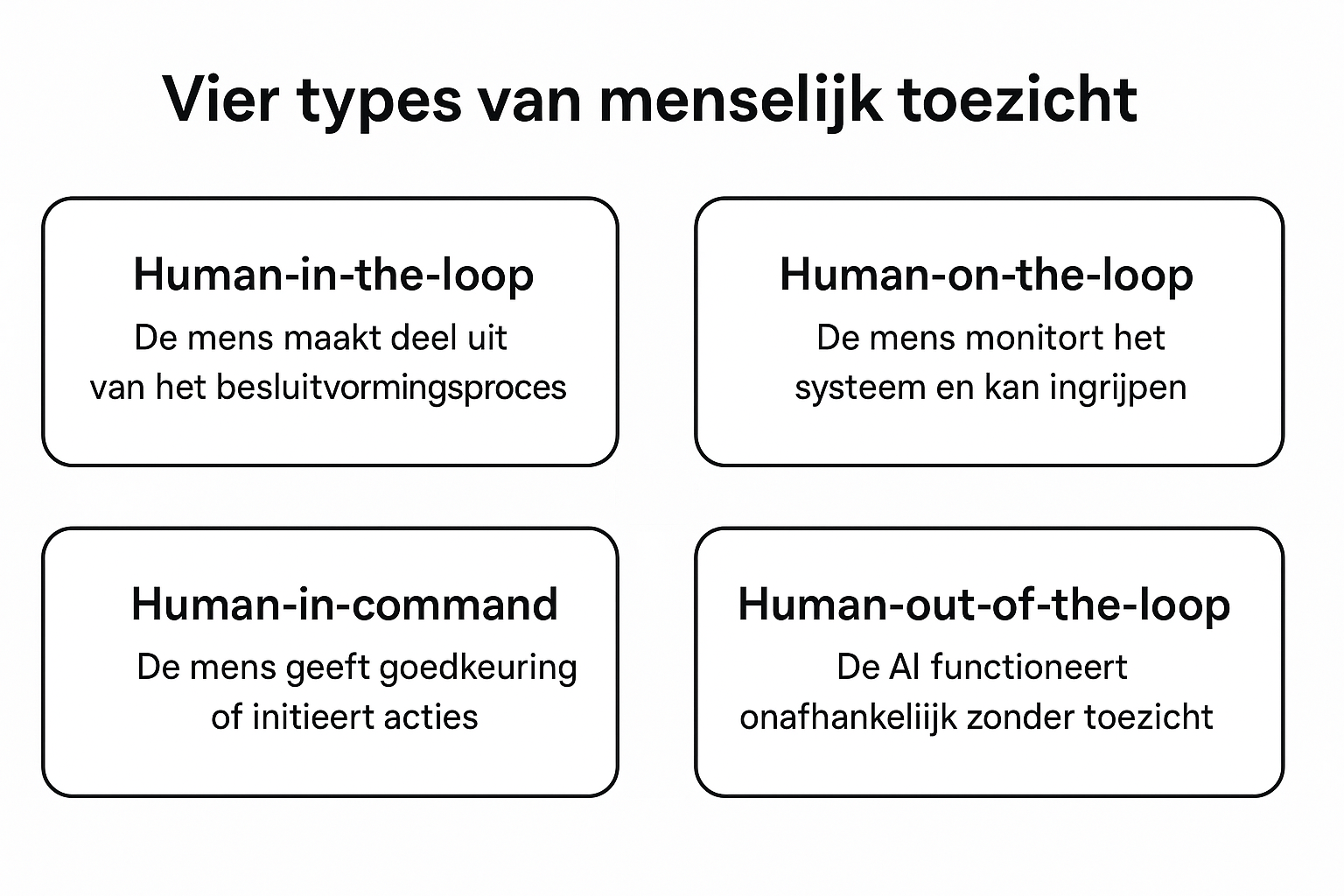

3. Models of Human Oversight

The European AI Act requires human oversight for high-risk systems. This oversight can be organized in different ways:

Humans and AI work together as co-pilots

Human-in-the-loop (HitL)

The human always makes the final decision. AI is an advisory tool. Think of a radiologist using AI to detect tumors on scans, but making the diagnosis themselves. Or a judge using AI to analyze case law, but drawing the legal conclusion themselves.

Human-on-the-loop (HotL)

AI works largely independently, but humans monitor and can intervene. For example: a care robot that monitors independently, but alerts the nurse in case of deviations. In industry, robots are deployed under human supervision for dangerous processes.

Human-in-command (HiC)

The AI only performs actions if explicitly approved by a human. Think of a drone that only takes off after human authorization. This control is also essential for automated weapon systems to prevent escalation.

Human-out-of-the-loop (HootL)

The AI functions completely autonomously. Think of algorithms on the stock market that trade in milliseconds without human intervention. This is risky, especially when things go wrong. The model is increasingly criticized because such systems are hard to control, both ethically and legally.

These models are not value-free: they say something about our role in technology. Do we want to be directors or passive spectators?
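To make the difference between the four models concrete, here is a minimal Python sketch of where the human sits in each of them. Everything in it is an assumption for illustration: the names `Oversight`, `Outcome`, and `decide`, and the three callbacks that stand in for the human, are hypothetical and do not come from the AI Act or from any library.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Oversight(Enum):
    HUMAN_IN_THE_LOOP = "HitL"
    HUMAN_ON_THE_LOOP = "HotL"
    HUMAN_IN_COMMAND = "HiC"
    HUMAN_OUT_OF_THE_LOOP = "HootL"


@dataclass
class Outcome:
    action: str      # what is actually carried out, or "none"
    decided_by: str  # "human" or "ai"


def decide(
    mode: Oversight,
    ai_proposal: str,
    human_choice: Callable[[str], str],     # hypothetical: human picks the final action
    human_veto: Callable[[str], bool],      # hypothetical: True means "stop this"
    human_approval: Callable[[str], bool],  # hypothetical: True means "go ahead"
) -> Outcome:
    if mode is Oversight.HUMAN_IN_THE_LOOP:
        # The AI only advises; the human makes the final decision.
        return Outcome(human_choice(ai_proposal), "human")
    if mode is Oversight.HUMAN_ON_THE_LOOP:
        # The AI acts by default; the monitoring human can intervene.
        if human_veto(ai_proposal):
            return Outcome("none", "human")
        return Outcome(ai_proposal, "ai")
    if mode is Oversight.HUMAN_IN_COMMAND:
        # Nothing happens without explicit human approval.
        if human_approval(ai_proposal):
            return Outcome(ai_proposal, "human")
        return Outcome("none", "human")
    # Human-out-of-the-loop: the proposal is executed without any check.
    return Outcome(ai_proposal, "ai")
```

In this sketch, the only branch in which no human callback is ever consulted is the last one, which is exactly why that model is the hardest to hold accountable.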

4. Strategies to Maintain Control

Control requires more than just a stop button. Some effective strategies:

| Strategy | Goal | Concrete Examples | Human Input | AI Output |
|---|---|---|---|---|
| Design for collaboration | AI as assistant, not as replacement | Legal AI system that suggests relevant case law | Lawyer formulates search query and assesses relevance | Suggested cases and arguments |
| Limit dependency | Stimulate critical thinking | Navigation app with multiple routes | Driver chooses route based on context | 3-4 alternative routes with pros/cons |
| Make AI understandable | Transparency in decision-making | Credit assessment system | Customer provides financial data | Explanation why credit is/isn't granted |
| Transparent social AI | Clear AI identification | Customer service chatbot | User asks questions | "I am an AI" disclaimer + targeted answers |
| Monitoring and feedback | Quality control | Content moderation system | Moderator assesses AI decisions | Marked content with risk level |
| Test in sandbox | Safe development | Medical diagnosis AI | Doctors test with fake data | Diagnosis suggestions without patient risk |

Design for Collaboration

Let AI work as a co-pilot, not as a replacement. Give the user control over how and when AI is deployed. A legal AI system, for example, can make suggestions, but not automatically draw legal conclusions. In healthcare, AI systems can serve as diagnostic assistants, but not as replacements for the doctor.

Limit Dependency

Let users think for themselves before seeing the AI output. Or present multiple suggestions instead of one result. This keeps critical thinking active. Think of navigation systems showing alternative routes instead of just one option.
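One way to picture "think first, then look" is a small gate that keeps the AI output hidden until the user has recorded their own judgment. The sketch below is only an illustration under that assumption; the class `AnswerFirstSession` and its methods are hypothetical names, not part of an existing product or library.

```python
from typing import List, Optional


class AnswerFirstSession:
    """Holds AI suggestions back until the user has committed to an answer."""

    def __init__(self, ai_suggestions: List[str]) -> None:
        self._ai_suggestions = list(ai_suggestions)
        self.user_answer: Optional[str] = None

    def submit_own_answer(self, answer: str) -> None:
        # An empty answer does not count as thinking for yourself.
        if not answer.strip():
            raise ValueError("Please enter your own answer first.")
        self.user_answer = answer

    def reveal_suggestions(self, max_items: int = 3) -> List[str]:
        # The AI output stays hidden until a user answer is on record.
        if self.user_answer is None:
            raise PermissionError("Record your own answer before viewing AI output.")
        # Several alternatives are shown instead of a single "right" result.
        return self._ai_suggestions[:max_items]
```

A navigation app built this way would first ask which route the driver had in mind and only then show its alternatives, so the suggestion is compared against a human choice instead of replacing it.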

Make AI Understandable

Explain how the AI reaches its conclusion, in understandable language. Avoid blind trust based on authority or apparent precision. Use visual explanations, such as cause-effect graphs or short explanatory videos that accompany the output.
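For simple scoring models, an explanation can be as basic as listing which inputs pushed the outcome in its direction. The sketch below assumes a toy linear credit score; the feature names, weights, bias, and threshold are invented for illustration and say nothing about how real credit models work. Deep learning models need dedicated explanation techniques that go beyond this.

```python
# Hypothetical weights of a toy linear credit score (illustration only).
WEIGHTS = {"income_k_eur": 0.04, "years_employed": 0.15, "missed_payments": -0.8}
BIAS = -1.5
THRESHOLD = 0.0


def explain_credit_decision(applicant: dict) -> dict:
    # Contribution of each input to the total score.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    approved = score >= THRESHOLD

    # Keep only the factors that pushed toward the actual outcome:
    # positive contributions for an approval, negative ones for a rejection.
    pushing = [
        (name, value)
        for name, value in contributions.items()
        if value != 0 and (value > 0) == approved
    ]
    pushing.sort(key=lambda item: abs(item[1]), reverse=True)

    return {
        "approved": approved,
        "score": score,
        "main_factors": [name for name, _ in pushing[:2]],
    }
```

For a rejected applicant, `main_factors` would name the inputs that lowered the score the most, which gives the customer something specific to contest or improve.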

Be Transparent with Social AI

Always make it clear that the user is dealing with an AI. Protect vulnerable groups, such as children or people with mental health issues. For example, via labels such as "chatbot" or time limits on interactions.

Provide Monitoring and Feedback

Build in systems that detect unwanted behavior. Let users give feedback, such as with content on social media. This makes the system safer and more human. Think of moderation tools with human final control.
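A moderation tool with human final control could look roughly like the sketch below: the AI only assigns a risk level, low-risk items pass, and everything else waits for a moderator whose verdict is logged next to the AI score. The names `Flag`, `triage`, and `record_feedback` and both threshold values are assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Flag:
    content_id: str
    risk: float  # risk level estimated by the AI, between 0 and 1


def triage(flags: List[Flag], auto_allow_below: float = 0.2) -> Tuple[List[Flag], List[Flag]]:
    """Let low-risk items pass; queue the rest for a human, highest risk first."""
    allowed = [f for f in flags if f.risk < auto_allow_below]
    review_queue = [f for f in flags if f.risk >= auto_allow_below]
    review_queue.sort(key=lambda f: f.risk, reverse=True)
    return allowed, review_queue


def record_feedback(log: List[dict], flag: Flag, moderator_removed: bool,
                    ai_remove_at: float = 0.5) -> None:
    """Store the human verdict with the AI score so disagreements can be audited."""
    ai_would_remove = flag.risk >= ai_remove_at
    log.append({
        "content_id": flag.content_id,
        "risk": flag.risk,
        "human_removed": moderator_removed,
        "disagreement": ai_would_remove != moderator_removed,
    })
```

The AI never removes anything by itself in this sketch; the logged disagreements are the feedback signal that shows where the system and the moderators diverge.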

Test in Safe Environments

Self-learning systems must be tested in sandbox environments. Don't release them into the real world without control mechanisms. In healthcare, AIs are tested on synthetic datasets before they are allowed to support patients.

5. The AI Act as Legal Backbone

The AI Act is the legal foundation for many of the above principles. This is not just about abstract rules, but about concrete obligations that impact how AI is developed and deployed in practice.

Obligation for Human Oversight (Article 14)

Imagine: an AI system evaluates job applications at a large company. Without human control, a biased algorithm could reject hundreds of candidates based on irrelevant or discriminatory factors. Article 14 therefore requires that a human monitors and can intervene, precisely to prevent these errors.

Transparency Requirements for AI Chatbots and Deepfakes (Article 50; Article 52 in the original proposal)

In 2022, a deepfake video of President Zelensky went viral, in which he supposedly called for surrender. Although fake, the video spread rapidly. The AI Act requires that users are clearly informed when they are dealing with AI content or chatbots. Think of a municipal chatbot: citizens need to know they are not talking to a human, so they can adjust their expectations.

Prohibitions on Manipulative or Exploitative AI (Article 5)

A distressing example: toys that manipulate children into repeatedly making purchases via voice commands. Or AI systems that influence elderly people to sign up for expensive subscriptions. Article 5 prohibits this type of AI that takes advantage of vulnerabilities or manipulates behavior without people noticing.

The law sets requirements for design, use, and oversight, with the aim of protecting human welfare, transparency, and fundamental rights. It's not a technical manual, but an ethical compass that forces organizations to take responsibility for their AI systems.

AI Should Enhance Humans, Not Replace Them

Maintaining control is not a side issue, but a prerequisite for reliable AI. Humans must remain at the helm. This requires smart design, good legislation, and a culture in which ethics, transparency, and collaboration are central.

The AI Act helps, but the real change lies in how we build, use, and think about AI. Technology is not a neutral force. It's up to us to determine whether AI enhances us—or sidelines us. Only by incorporating human oversight from the design stage can we ensure that AI systems are not merely efficient, but also fair, explainable, and human-centered.
